There are several different ways to generate a table or series of numbers between two given values. Say that you wanted to populate a table with an integer column and the values should be sequential integers between 1,000 and 1,000,000. Below I cover three different methods of accomplishing this task. The last method covers using the GENERATE_SERIES function that is new to SQL Server 2022.

Method 1: Using a recursive CTE

One way that you could do this is by using a recursive CTE (common table expression) like this:

--*************************************************************************

-- All numbers between 1000 and 1,000,000

-- using a recursive CTE

--*************************************************************************

declare @Start int = 1000;

declare @End int = 1000000;

with cte as

(

select @Start as [Num]

union all

select Num + 1 from cte where [Num] < @End

)

select

[Num]

from cte

option (maxrecursion 0);

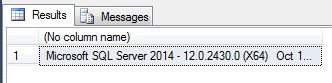

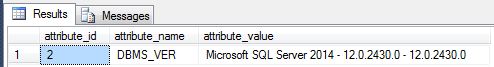

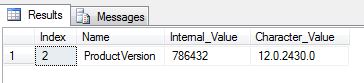

This produces the following result:

Method 2: Harnessing the power of a Cartesian Join

Another way that you could accomplish this is by harnessing the power of a Cartesian join – yes, that thing that you usually do by accident!

--*************************************************************************

-- All numbers between 1000 and 1,000,000

-- without a recursive CTE

--*************************************************************************

declare @Start int = 1000;

declare @End int = 1000000;

with cte as

(

SELECT ones.n + (tens.n * 10) + (hundreds.n * 100) + (thousands.n * 1000) + (tenthousands.n * 10000) + (hundredthousands.n * 100000) + (millions.n * 1000000) + (tenmillions.n * 10000000) as [Num]

FROM ( VALUES (0), (1), (2), (3), (4), (5), (6), (7), (8), (9) ) ones(n)

cross apply ( VALUES (0), (1), (2), (3), (4), (5), (6), (7), (8), (9) ) tens(n)

cross apply ( VALUES (0), (1), (2), (3), (4), (5), (6), (7), (8), (9) ) hundreds(n)

cross apply ( VALUES (0), (1), (2), (3), (4), (5), (6), (7), (8), (9) ) thousands(n)

cross apply ( VALUES (0), (1), (2), (3), (4), (5), (6), (7), (8), (9) ) tenthousands(n)

cross apply ( VALUES (0), (1), (2), (3), (4), (5), (6), (7), (8), (9) ) hundredthousands(n)

cross apply ( VALUES (0), (1), (2), (3), (4), (5), (6), (7), (8), (9) ) millions(n)

cross apply ( VALUES (0), (1), (2), (3), (4), (5), (6), (7), (8), (9) ) tenmillions(n)

where ones.n + (tens.n * 10) + (hundreds.n * 100) + (thousands.n * 1000) + (tenthousands.n * 10000) + (hundredthousands.n * 100000) + (millions.n * 1000000) + (tenmillions.n * 10000000) <= @End

)

select

[Num]

from cte

order by [Num] asc;

This produces the same result as the first method:

Method 3: Using GENERATE_SERIES instead:

But new in 2022, SQL Server now has a GENERATE_SERIES function that you can use to produce the same results with much less code.

GENERATE_SERIES generates a series of numbers within a given interval. The interval and the step between series values are defined by the user. By using this built-in function you can generate the same results as above with a single line of code:

select value as [Num] from GENERATE_SERIES(1000, 1000000, 1);

And finally we see the same result as the first two methods… just with MUCH less typing:

Arguments passed to this function:

start

The first parameter that you pass to the function is start. The start parameter specifies the start value of the series that will be generated by the function. In our example above we specified 1,000 as the start because we wanted to generate a series of numbers between 1,000 and 1,000,000, making 1,000 the starting number. This parameter can be specified as a variable, a literal, or a scalar expression of type tinyint, smallint, int, bigint, decimal, or numeric.

stop

The second parameter that you pass to the function is the stop. The stop parameter specifies the end value of the series that will be generated by the function. In our example above we specified 1,000,000 as the end because we wanted the series to stop at one million. This parameter can be specified as a variable, a literal, or a scalar expression of type tinyint, smallint, int, bigint, decimal, or numeric. The series stops once the last generated step value exceeds the stop value.

The data type for stop must match the data type for start.

[ step ]

The last parameter that is passed to the function Indicates the number of values to increment or decrement between steps in the series. step is an expression of type tinyint, smallint, int, bigint, decimal, or numeric. step can be either negative or positive, but can’t be zero (0). In the example above we wanted to have sequential number without any gaps. But if we wanted only even numbers we could have specified “2” as our step value and then each additional number would have stepped up by a value of 2.

This argument is optional. The default value for step is 1 if start is less than stop, otherwise, the default value is -1 if start is greater than stop.

If start is less than stop and a negative value is specified for step, or if start is greater than stop and a positive value is specified for step, an empty result set is returned.

Much simpler! You can find the Microsoft page for this function here